July 28, 2023

Langtail

LLM testing

2,554

5.0 (1 rating)

The low-code platform for testing AI apps

Top alternatives

Localai (2,443)

BenchLLM (1,657)

Rhesis AI (257)

Overview

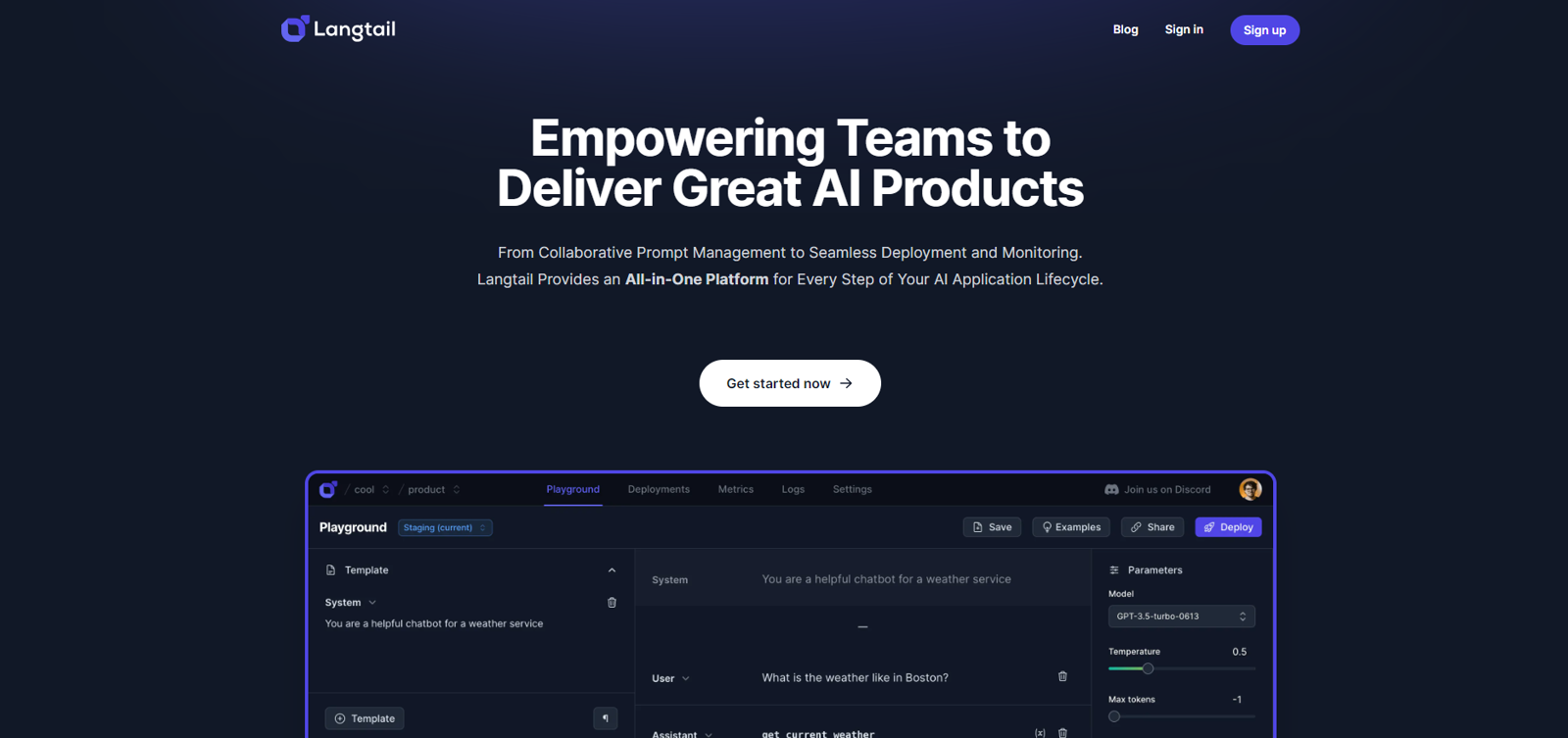

Langtail is a low-code platform for testing AI applications, focusing on LLM testing. Control unpredictable LLM outputs with Langtail's testing tools, which let you score tests using natural language, pattern matching, or custom code.
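The two programmatic scoring styles mentioned above, pattern matching and custom code, can be illustrated with a small sketch. This is not Langtail's API: the `Scorer` type, the helper names, and the sample output are all hypothetical and exist only to show the idea of checking an LLM output against rules.

```python
import re
from typing import Callable

# Hypothetical scorer type: takes the model output, returns True on pass.
Scorer = Callable[[str], bool]


def pattern_scorer(pattern: str) -> Scorer:
    """Pattern matching: pass when the output contains a regex match."""
    compiled = re.compile(pattern)
    return lambda output: compiled.search(output) is not None


def max_words_scorer(limit: int) -> Scorer:
    """Custom code: pass when the output has at most `limit` words."""
    return lambda output: len(output.split()) <= limit


def run_checks(output: str, scorers: dict[str, Scorer]) -> dict[str, bool]:
    """Apply every named scorer to one output and collect the verdicts."""
    return {name: scorer(output) for name, scorer in scorers.items()}


# Illustrative checks for a made-up customer-support prompt.
checks = {
    "mentions_refund": pattern_scorer(r"(?i)\brefund\b"),
    "is_concise": max_words_scorer(20),
}

results = run_checks("You can request a refund within 30 days.", checks)
print(results)  # {'mentions_refund': True, 'is_concise': True}
```

Natural-language scoring would typically replace the function body with a call to a judging model, which is left out here because it depends on the provider.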

Optimize your AI models by experimenting with different parameters and prompts, using insights from detailed analytics. Work with Langtail through a user-friendly, spreadsheet-like interface.

Enhance your AI app's security with AI Firewall to prevent prompt injections and information leaks. Streamline your AI development and testing process with Langtail.

Try it for free today.

Releases

June 25, 2023

Petr Brzek

Initial release of Langtail.

Pricing

Pricing model: Freemium

Paid options from: $99/month

Billing frequency: Monthly

Reviews

Average rating: 5.0 from 1 rating (5 stars: 1; 4 stars: 0; 3 stars: 0; 2 stars: 0; 1 star: 0).

Pros and Cons

Pros

Non-technical team integration

Analytics and reporting capabilities

Comprehensive change management

Intelligent resource management

Prompts deployable as APIs

Easy system integration

Different prompt environments

Rapid debugging and testing

Dynamic LLM provider switching

Rate limiting feature

Continuous LLM integration

Prevents potential overspending

Detailed API logs

Prompts version management

Performance tracking

Cost and latency tracking

Provider outage management

Unified LLM management

Prompt testing tools

Prompts behavior monitoring

User registration in MVP

Project structuring in MVP

Detailed editor in MVP

Prompt-as-API endpoint deployment

Event logs for API calls

Continuous LLM prompt integration

Beta testing feedback incorporation

LLM workflow simplification

Test collection for LLM prompts

Cons

Still in development phase

No mobile application

Provider switching might disrupt performance

Rate limiting might slow usage

Might have waitlist delays

Integrations might disrupt existing workflows

Not fully tested

Potential latency issues

Effectiveness depends on user skills

Limited information about security measures

Q&A

What is LangTale?

LangTale is a platform designed to streamline the management of Large Language Model (LLM) prompts. It enables teams to handle LLM prompts more effectively and helps facilitate a deeper understanding of AI functionalities.

How does LangTale streamline the management of LLM prompts?

LangTale streamlines the management of LLM prompts by providing a centralized system where users can collaborate, manage versions, tweak prompts, run tests, maintain logs, and set environments. It eases the prompt integration process for non-tech team members and features analytics/reports, comprehensive change management, smart resource management, rapid debugging, and testing tools, along with environment settings for prompt testing and deployment.

What features does LangTale offer to simplify LLM prompt management?

LangTale offers prompt integration for non-technical team members and monitoring of LLM performance, including costs and latency. You can track LLM outputs, maintain detailed API logs, revert to an earlier prompt version when a change goes wrong, and manage resources by setting usage and spending limits. LangTale also provides debugging and testing tools, environment settings for each prompt, and dynamic LLM provider switching.

What is meant by prompt integration in LangTale?

Prompt integration in LangTale refers to the notion that each LLM prompt can be deployed as an API endpoint. These endpoints can be straightforwardly integrated into existing systems or applications, allowing for the reuse of prompts across different applications or systems with minimal disruption.

What kind of analytics and reporting capabilities does LangTale provide?

LangTale provides analytics and reporting capabilities to monitor the performance of Large Language Models. It provides features to track costs, latency, and more, helping users to make informed decisions based on these metrics.

How does LangTale support comprehensive change management?

Comprehensive change management in LangTale involves tracking LLM outputs, maintaining detailed API logs and readily reverting changes with each new version of a prompt. It provides clear visibility and control for all changes and versions.

How can LangTale help in managing resources intelligently?

LangTale aids in intelligent resource management by allowing users to set usage and spending limits. This ensures efficient utilization of resources and prevents possible overspending.
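As a rough illustration of usage and spending limits, the sketch below tracks calls and spend against a budget before each request. The `UsageBudget` class and its fields are hypothetical and do not reflect how LangTale implements limits; amounts are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass
class UsageBudget:
    """Hypothetical per-project budget: a call cap and a spend cap."""

    max_calls: int
    max_spend_cents: int
    calls: int = 0
    spend_cents: int = 0

    def allows(self, estimated_cost_cents: int) -> bool:
        """Return True if one more call of this cost stays within both caps."""
        within_calls = self.calls + 1 <= self.max_calls
        within_spend = self.spend_cents + estimated_cost_cents <= self.max_spend_cents
        return within_calls and within_spend

    def record(self, cost_cents: int) -> None:
        """Account for a call that was actually made."""
        self.calls += 1
        self.spend_cents += cost_cents


budget = UsageBudget(max_calls=3, max_spend_cents=100)

if budget.allows(60):
    budget.record(60)  # first call fits: 60 of 100 cents used

print(budget.allows(60))  # False: 120 cents would exceed the 100-cent cap
print(budget.allows(40))  # True: exactly reaches the cap
```

Checking before the call, rather than after, is what prevents overspending instead of merely reporting it.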

How can I integrate LangTale into my existing systems?

LangTale can be smoothly integrated into existing systems and applications. Each LLM prompt can be deployed as an API endpoint, which allows for seamless integration and minimizes disruption to existing workflows.

What is the significance of LangTale's environment settings feature?

Environment settings in LangTale facilitate effective testing and implementation of LLM prompts. Users can set up different environments for each prompt which allows for more controlled and accurate testing of prompts in a variety of scenarios.

Can LangTale help in quick debugging and testing?

Yes, LangTale provides rapid debugging and testing tools. These tools help identify and resolve issues quickly, so that prompts behave as expected and perform well.

What is the purpose of dynamic LLM provider switching in LangTale?

The dynamic LLM provider switching feature in LangTale allows for seamless switching between LLM providers in case of an outage or high latency with one provider. This feature ensures that application performance remains uninterrupted.
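The general idea behind provider switching can be sketched as an ordered fallback: try the preferred provider and move to the next one when a call fails. Everything below is an assumption for illustration, including the `Provider` type, the function names, and the stub providers. It treats a timeout raised by the provider's own client as a failure and does not measure latency itself.

```python
from typing import Callable, Sequence

# Hypothetical provider type: takes a prompt, returns a completion or raises.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


def complete_with_fallback(
    prompt: str, providers: Sequence[tuple[str, Provider]]
) -> tuple[str, str]:
    """Try providers in order; return (provider_name, reply) from the first success."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # outage, timeout, rate limit, etc.
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Stub providers standing in for real LLM clients.
def primary(prompt: str) -> str:
    raise ConnectionError("simulated outage")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, reply = complete_with_fallback("hi", [("primary", primary), ("backup", backup)])
print(used, reply)  # backup echo: hi
```

A production version would also need per-provider timeouts and some way to stop retrying a provider that is known to be down.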

How is LangTale tailored for developers?

LangTale is tailored for developers by implementing rate limiting, continuous integration for LLMs, and intelligent LLM provider switching. It simplifies LLM prompt management by easily integrating with existing systems, setting up different environments for each prompt, rapidly debugging and testing, and switching between LLM providers dynamically.

How does LangTale support non-tech team members in managing LLM prompts?

LangTale supports non-tech team members by making LLM prompt integration and management accessible without requiring coding skills. Non-tech team members can take part in tweaking prompts, managing versions, running tests, maintaining logs, and setting environments.

What is the LangTale playground?

The LangTale Playground is described as the world's first playground supporting OpenAI function calling. It's a free tool where developers can experiment with, tweak, and perfect their LLM prompts.

How can LangTale assist in effective testing and implementation?

LangTale facilitates effective testing and implementation by allowing users to set up different environments for each prompt. Rapid debugging, testing tools and test collections help in quickly identifying and addressing any issues, ensuring the prompts are working as expected.

What is the launch plan for LangTale?

LangTale's launch plan is to first have a private beta launch that will allow a select group of users to test the platform and provide feedback. After incorporating the feedback and ironing out any issues, LangTale will be launched publicly.

What is the intended user group for LangTale?

LangTale is intended for anyone who works with Large Language Model prompts. Its features cater both to developers, who need efficient integration, debugging, and environment settings, and to non-technical team members, who need a simpler way to manage LLM prompts.

Who is behind the development of LangTale?

LangTale is developed by Petr Brzek, the co-founder of Avocode. His vision for LangTale was to fill a significant gap in the market for efficient tools to manage, version, and test prompts, making working with these powerful models more straightforward and efficient for all.

How can I join LangTale's private beta launch?

Interested users can join the private beta launch of LangTale by joining the waitlist provided on their website.

What will happen during the private beta launch of LangTale?

During the private beta launch of LangTale, a select group of users will test the platform, provide feedback, and assist in identifying any issues or improvements. This process is meant to deliver a user-focused and effective solution before the public launch.
