What is the Nebius AI Studio Inference Service?

Nebius AI Studio's Inference Service gives users access to hosted open-source models for fast, accurate inference. It suits teams without Machine Learning Operations (MLOps) experience, because it comes with production-ready infrastructure. It offers ultra-low latency, especially for users in Europe, where the data center is located. Users can choose between faster processing at a higher cost and slower, more economical processing.

What types of models are available in Nebius AI Studio?

Nebius AI Studio offers a diverse range of open-source models, such as MetaLlama-3.1-8B-instruct, MetaLlama-3.1-405B-instruct, Mistral, Mixtral-8x22B-Instruct-v0.1, Ai2OLMo-7B-Instruct, and DeepSeek, among others. Nebius regularly adds new models to the library.

Can I choose between different processing speeds in Nebius AI Studio?

Yes, Nebius AI Studio lets users choose between processing speeds based on their needs. Users can opt for faster processing at a slightly higher cost or slower, more economical processing, so they can match cost to the urgency of the task and their budget.

What is the ultra-low latency feature of Nebius AI Studio's Inference Service?

The ultra-low latency feature of Nebius AI Studio's Inference Service keeps processing times short. This is especially beneficial for users located in Europe (where the data center is located), giving them a fast time to first token. The feature is aimed at users who need quick results: it reduces the wait between sending a request and receiving the first part of the response.
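Time to first token can be measured on any streamed response. The following is a minimal sketch, not Nebius code: `measure_time_to_first_token` is a hypothetical helper that times an arbitrary iterable of tokens, and `fake_stream` is a stand-in for a real streaming response so that the example runs without a network call.

```python
import time
from typing import Iterable, Iterator, Tuple


def measure_time_to_first_token(stream: Iterable[str]) -> Tuple[float, float, int]:
    """Return (seconds until first token, total seconds, token count)."""
    start = time.perf_counter()
    first_token_at = None
    count = 0
    for _ in stream:
        if first_token_at is None:
            # Record the moment the first token arrives.
            first_token_at = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    return first_token_at - start, end - start, count


def fake_stream(first_delay: float, gap: float, tokens: int) -> Iterator[str]:
    """Hypothetical stand-in for a streamed model response."""
    time.sleep(first_delay)  # simulated wait before the first token
    for i in range(tokens):
        if i:
            time.sleep(gap)  # simulated gap between later tokens
        yield f"tok{i}"


if __name__ == "__main__":
    ttft, total, n = measure_time_to_first_token(fake_stream(0.05, 0.01, 5))
    print(f"first token after {ttft:.3f}s, {n} tokens in {total:.3f}s")
```

In practice the iterable would be the streaming response of whichever client you use; the measurement logic stays the same.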

What benefits do I get if I use Nebius AI Studio in Europe?

Using Nebius AI Studio in Europe gives you the benefit of ultra-low latency. Nebius AI Studio's data center is located in Europe, so users based in the region get the quickest response times.

How can I earn credits using Nebius AI Studio?

Users have an opportunity to earn credits by building applications using Nebius AI Studio and open-source models. If an application is selected among the top 3 projects, authors can receive $1000 in Nebius AI Studio credits.

What is the user interface of Nebius AI Studio like?

Nebius AI Studio's interface is user-friendly and simplifies testing, comparing, and implementing AI models. Users can sign up and immediately start working with models and running them in their applications through this interface.

Can I build an application using Nebius AI Studio?

Yes, Nebius AI Studio offers the functionality to build applications using its platform and open-source models. The user's creativity sets the limit; applications could range from chatbots to data analyzers to code assistants and beyond. The authors of the top 3 projects have the opportunity to earn $1000 in Nebius AI Studio credits.

What does the cost efficiency of Nebius AI Studio's Inference Service look like?

Nebius AI Studio's Inference Service provides cost efficiency by letting users pay only for what they use, which helps meet budget goals, especially in Retrieval-Augmented Generation (RAG) and contextual scenarios. The service also offers a choice between faster processing at a higher cost and slower processing at a lower price. New customers also receive $100 in free inference credits, which further reduces the initial cost.

How can I start testing, comparing, and running AI models in Nebius AI Studio?

Users can start testing, comparing, and running AI models in their applications by signing up for Nebius AI Studio and accessing the model playground. This web interface allows users to try out different AI models available in Nebius AI Studio without the need to write any code.

Where can I find available models and prices for Nebius AI Studio?

Pricing for the available models can be found in the pricing section of the Nebius AI Studio website. It provides a detailed breakdown of the input and output token costs for each model.
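Because pricing is split into input and output token costs, the cost of a request can be estimated from its token counts. The sketch below is illustrative only: it assumes prices are quoted per one million tokens, and the `TokenPrice` class, the `estimate_cost` function, and the price figures are all hypothetical, not taken from the Nebius price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Price of one model, in dollars per one million tokens (assumed unit)."""
    input_per_million: float
    output_per_million: float


def estimate_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Estimate the dollar cost of a workload from its token counts."""
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    # Made-up prices for a faster and a slower, cheaper option.
    fast = TokenPrice(input_per_million=1.0, output_per_million=3.0)
    economy = TokenPrice(input_per_million=0.5, output_per_million=1.5)
    for name, price in (("fast", fast), ("economy", economy)):
        cost = estimate_cost(price, input_tokens=2_000_000, output_tokens=1_000_000)
        print(f"{name}: ${cost:.2f}")
```

Substituting the real per-model figures from the pricing section gives a rough budget for a workload before running it.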

Do I get any welcome credits with Nebius AI Studio?

Yes, Nebius AI Studio provides $100 in free inference credits for new customers to try their product through the Playground or to spend on their inference workloads through the API.

Can I use Nebius AI Studio Inference Service for large production workloads?

Yes. Nebius AI Studio's Inference Service is designed specifically for large production workloads. Its production-ready infrastructure and high-quality open-source models make it well suited to large-scale projects.

Can I request additional open-source models in Nebius AI Studio?

Yes, users can request additional open-source models in Nebius AI Studio. The request form for additional open-source models becomes available after you log into the platform.

How can I get a dedicated instance on Nebius AI Studio?

Users can get a dedicated instance either by allocating a server in the self-service console of Nebius AI Studio or by contacting their support team.

How secure is Nebius AI Studio and where does my data go?

Nebius AI Studio applies high standards of security to user data. Its servers are located in Finland and are managed to meet European data security regulations. Nebius AI Studio does not provide specific details about where user data goes after processing, but it emphasizes its adherence to stringent European data security norms.

Are there any restrictions to earning Nebius AI Studio credits?

As per the offer, the welcome credits of $100 and the opportunity to earn $1000 in Nebius AI Studio credits for building an application are valid until January 15, 2025, and are available for new customers only. The offer is limited to one per customer and terms and conditions apply.

What are the popular AI models provided by Nebius AI Studio's Inference Service?

The exact models popularly used in Nebius AI Studio's Inference Service aren't specified on their website. However, they provide endpoints for a broad range of popular AI models. Users can choose from a diverse range of open-source models, including MetaLlama-3.1-8B-instruct, MetaLlama-3.1-405B-instruct, Mistral, Mixtral-8x22B-Instruct-v0.1, Ai2OLMo-7B-Instruct, DeepSeek, and many more.

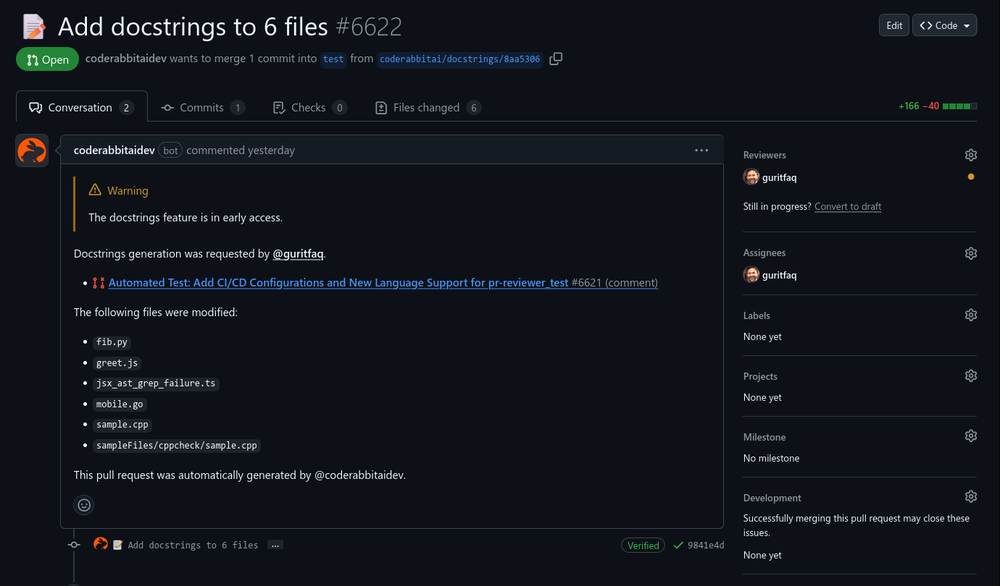
